- IBM Speech to Text prototype install

- IBM Speech to Text prototype code

- IBM Speech to Text prototype trial

IBM Speech to Text prototype code

The Watson Speech to Text service leverages machine learning to combine knowledge of grammar, language structure, and the composition of audio and voice signals to accurately transcribe the human voice from many languages.

To use the app:

- Select an input Language model (defaults to English).
- Press the Play audio sample button to hear our example audio and watch as it is transcribed.
- Press the Record your own button to transcribe audio from your microphone. Press the button again to stop (the button label becomes Stop recording).
- Use the Upload file button to transcribe audio from a file.

See DEVELOPING.md and TESTING.md for more details about developing and testing this app.

This code pattern is licensed under the Apache License, Version 2. Separate third-party code objects invoked within this code pattern are licensed by their respective providers pursuant to their own separate licenses.

Deploy the server: click on one of the options below for instructions on deploying the Node.js server.

If you need to find the service later, use the main navigation menu (☰) and select Resource list to find the service under Services. Click on the service name to get back to the Manage view (where you can collect the API Key and URL).

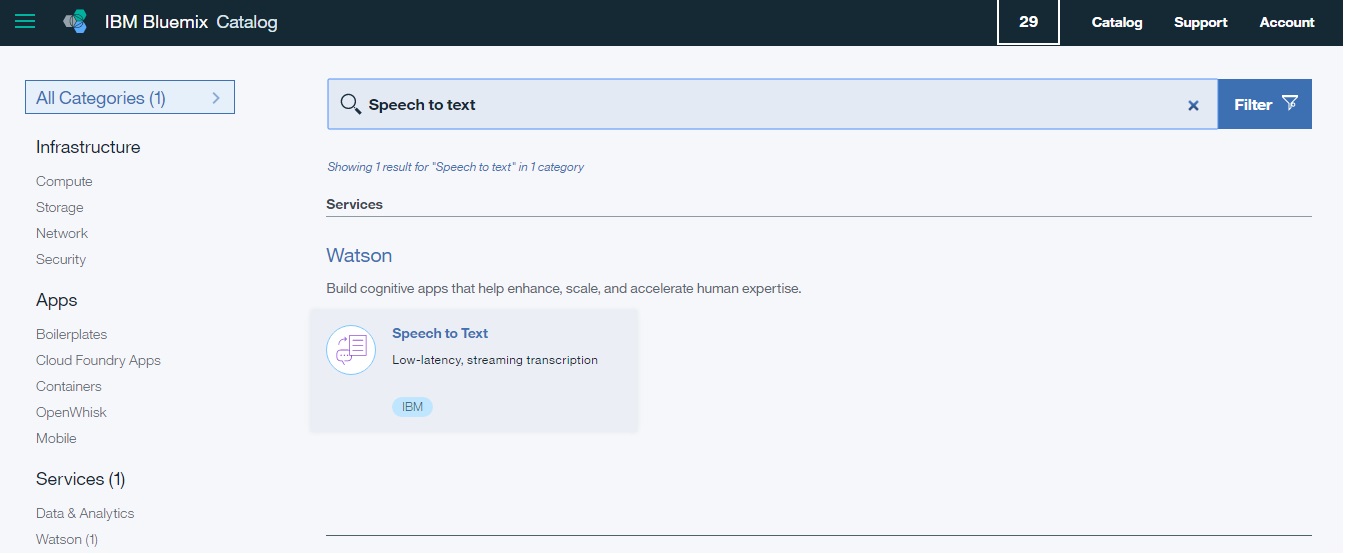

Click here to create a Speech to Text instance.

IBM Speech to Text prototype trial

If you do not have an IBM Cloud account, register for a free trial account here.

On IBM Cloud Pak for Data, to determine whether the service is installed, click the Services icon and check whether the service is enabled. Then gather the credentials:

- For production use, create a user to use for authentication. From the main navigation menu (☰), select Administer > Manage users and then + New user.
- From the main navigation menu (☰), select My instances.
- On the Provisioned instances tab, find your service instance, and then hover over the last column to find and click the ellipses icon.
- Copy the URL to use as the SPEECH_TO_TEXT_URL when you configure credentials.
- Optionally, copy the Bearer token to use in development testing only. It is not recommended to use the bearer token except during testing and development because that token does not expire.
- Use the Menu and select Users and + Add user to grant your user access to this service instance. This is the SPEECH_TO_TEXT_USERNAME (and SPEECH_TO_TEXT_PASSWORD) you will use when you configure credentials to allow the Node.js server to authenticate.
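The credential steps above can be sketched as a small Node.js helper that reads the values from environment variables. This is only an illustration: the function name `readSpeechToTextCredentials` and the returned object shape are hypothetical, and `SPEECH_TO_TEXT_BEARER_TOKEN` is an assumed variable name (the text above names only `SPEECH_TO_TEXT_URL`, `SPEECH_TO_TEXT_USERNAME` and `SPEECH_TO_TEXT_PASSWORD`).

```javascript
// Hypothetical helper: collects Speech to Text credentials from an
// environment-like object (for example process.env).
function readSpeechToTextCredentials(env) {
  const url = env.SPEECH_TO_TEXT_URL;
  if (!url) {
    throw new Error('SPEECH_TO_TEXT_URL is not set');
  }

  const username = env.SPEECH_TO_TEXT_USERNAME;
  const password = env.SPEECH_TO_TEXT_PASSWORD;
  if (username && password) {
    // Preferred for production use: a dedicated user.
    return { url, authType: 'basic', username, password };
  }

  // Assumed variable name; the bearer token does not expire, so it is
  // meant for development and testing only.
  const bearerToken = env.SPEECH_TO_TEXT_BEARER_TOKEN;
  if (bearerToken) {
    return { url, authType: 'bearer', bearerToken };
  }

  throw new Error('No Speech to Text credentials found');
}

// Usage (not executed here):
// const credentials = readSpeechToTextCredentials(process.env);
```

Preferring the username and password over the bearer token mirrors the recommendation above to keep the token out of production.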

IBM Speech to Text prototype install

WARNING: This repository is no longer maintained ⚠️ The repository will be kept available in read-only mode.

Sample React app for playing around with the Watson Speech to Text service.

Watson Speech to Text service is part of AI services available in IBM Cloud. The service provides speech transcription capabilities for your applications. The service leverages machine learning to combine knowledge of grammar, language structure, and the composition of audio and voice signals to accurately transcribe the human voice.

- User supplies an audio input to the application (running locally, in the IBM Cloud or in IBM Cloud Pak for Data).
- The application sends the audio data to the Watson Speech to Text service through a WebSocket connection.
- As the data is processed, the Speech to Text service returns information about extracted text and other metadata to the application to display.

The instructions will depend on whether you are provisioning services using IBM Cloud Pak for Data or on IBM Cloud. Click to expand one: IBM Cloud Pak for Data. An administrator must install the service on the IBM Cloud Pak for Data platform, and you must be given access to the service.

I am currently trying to send a microphone stream to the Watson STT service, but for some reason the Watson service is not receiving the stream (I'm guessing), so I get the error "Error: No speech detected for 30s". I have sent a .wav file to Watson and I have also tested piping micInputStream to my local files, so I know both are at least set up correctly. I am fairly new to NodeJS / JavaScript, so I'm hoping the error might be obvious.

    var SpeechToTextV1 = require('watson-developer-cloud/speech-to-text/v1');
    content_type: 'audio/l16; rate=44100; channels=2',
    const micInputStream = micInstance.getAudioStream();
    console.log('Watson is listening, you may speak now.');
    var recognizeStream = speechToText.recognizeUsingWebSocket(params);
    var textStream = micInputStream.pipe(recognizeStream).setEncoding('utf8');
    textStream.on('data', user_speech_text => console.log('Watson hears:', user_speech_text));
    textStream.on('error', e => console.log(`error: ${e}`));

    // Remove .pipe(process.stdout) if you don't want the transcription printed to the console
    audioRecorder.start().stream().pipe(recognizeStream).pipe(process.stdout);
    // Finally pipe the transcription to the transcription file
    recognizeStream.pipe(fs.createWriteStream('./transcription.…
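One detail worth checking in the question above is that the `content_type` string describes the audio the microphone actually produces. A minimal sketch, assuming raw 16-bit PCM input: the helper name `buildL16ContentType` is hypothetical, and whether a mismatch is the cause of the "No speech detected" error is not confirmed by the source.

```javascript
// Hypothetical helper: builds an audio/l16 content type string with
// semicolon-separated parameters, e.g. 'audio/l16; rate=44100; channels=2'.
function buildL16ContentType(sampleRate, channels) {
  if (!Number.isInteger(sampleRate) || sampleRate <= 0) {
    throw new RangeError('sampleRate must be a positive integer');
  }
  if (!Number.isInteger(channels) || channels <= 0) {
    throw new RangeError('channels must be a positive integer');
  }
  return `audio/l16; rate=${sampleRate}; channels=${channels}`;
}

// The same values should also be used when configuring the microphone,
// so that the declared format and the real stream agree.
const params = {
  content_type: buildL16ContentType(44100, 2)
};
```

Deriving the string from the same numbers used to configure the recorder keeps the two from drifting apart when one of them is changed.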
